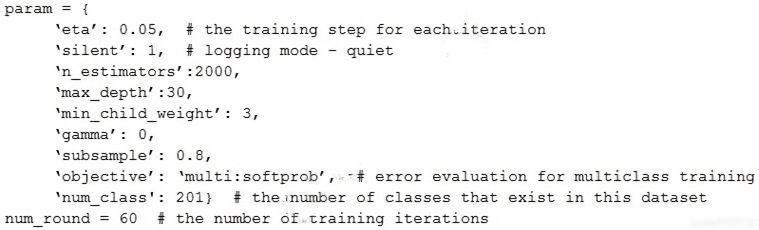

A Machine Learning Specialist is assigned to a Fraud Detection team and must tune an XGBoost model that performs well on the test data but does not perform as expected on new, unseen data. The existing parameters are shown in the image above.

Which parameter tuning guidelines should the Specialist follow to avoid overfitting?
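XGBoost's own parameter-tuning notes describe two broad ways to control overfitting. The first is to limit model complexity through `max_depth`, `min_child_weight`, and `gamma`. The second is to add randomness through `subsample` and `colsample_bytree`. The notes also suggest lowering the step size `eta` while increasing the number of boosting rounds. The sketch below shows which direction to move each parameter. The helper `make_more_conservative` and its step sizes are hypothetical illustrations, not tuned values. The baseline dict in the usage line is also hypothetical, because the actual parameters from the image are not reproduced here.

```python
from typing import Any, Dict


def make_more_conservative(params: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of an XGBoost parameter dict nudged toward less overfitting.

    Hypothetical helper: the adjustment amounts are illustrative assumptions.
    Missing keys fall back to XGBoost's documented defaults
    (max_depth=6, min_child_weight=1, gamma=0, subsample=1, colsample_bytree=1, eta=0.3).
    """
    tuned = dict(params)  # copy so the caller's dict is not mutated

    # Complexity controls: shallower trees capture fewer feature interactions.
    tuned["max_depth"] = max(1, int(params.get("max_depth", 6)) - 2)
    # A larger minimum child weight blocks splits that create very small leaves.
    tuned["min_child_weight"] = float(params.get("min_child_weight", 1)) * 2
    # A larger gamma requires a bigger loss reduction before a split is kept.
    tuned["gamma"] = float(params.get("gamma", 0)) + 1.0

    # Randomness controls: train each tree on a subset of rows and columns.
    tuned["subsample"] = min(float(params.get("subsample", 1.0)), 0.8)
    tuned["colsample_bytree"] = min(float(params.get("colsample_bytree", 1.0)), 0.8)

    # Smaller learning rate; pair this with more boosting rounds when training.
    tuned["eta"] = float(params.get("eta", 0.3)) / 2
    return tuned


# Hypothetical baseline, not the parameters from the question's image.
baseline = {"objective": "binary:logistic", "max_depth": 10, "min_child_weight": 1}
print(make_more_conservative(baseline))
```

Every adjustment above moves the model toward simpler, more regularized trees. Moving any of these parameters the other way, for example raising `max_depth` or lowering `min_child_weight`, makes overfitting more likely.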
