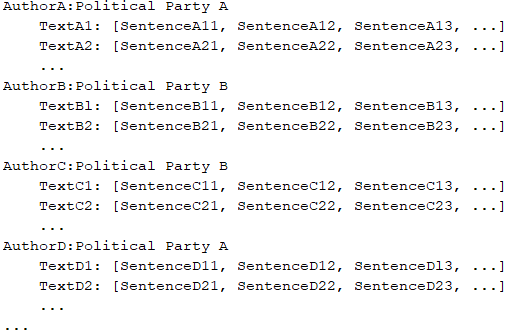

Your team is working on an NLP research project to predict political affiliation of authors based on articles they have written. You have a large training dataset that is structured like this:

You followed the standard 80%-10%-10% data distribution across the training, testing, and evaluation subsets. How should you distribute the training examples across the train-test-eval subsets while maintaining the 80-10-10 proportion?

rc380

Highly Voted 3 years, 11 months agosensev

3 years, 10 months agodxxdd7

3 years, 10 months agojk73

3 years, 9 months agojk73

3 years, 9 months agoinder0007

Highly Voted 4 years agoGogoG

3 years, 9 months agoDunnoth

2 years, 4 months agochibuzorrr

Most Recent 7 months, 1 week agoPhilipKoku

1 year, 1 month agogirgu

1 year, 1 month agotavva_prudhvi

2 years agoM25

2 years, 2 months agoJohn_Pongthorn

2 years, 4 months agoenghabeth

2 years, 5 months agobL357A

2 years, 10 months agosuresh_vn

2 years, 11 months agoggorzki

3 years, 5 months agoNamitSehgal

3 years, 6 months agoJobQ

3 years, 6 months agogiaZ

3 years, 4 months agopddddd

3 years, 9 months agoMacgogo

3 years, 9 months agoDanny2021

3 years, 10 months agogeorge_ognyanov

3 years, 9 months ago