Your company is performing data preprocessing for a learning algorithm in Google Cloud Dataflow. Numerous data logs are being are being generated during this step, and the team wants to analyze them. Due to the dynamic nature of the campaign, the data is growing exponentially every hour.

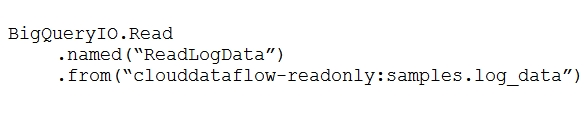

The data scientists have written the following code to read the data for a new key features in the logs.

You want to improve the performance of this data read. What should you do?

arthur2385

Highly Voted 2 years, 4 months agomaxdataengineer

Highly Voted 2 years, 3 months agomaxdataengineer

2 years, 3 months agocetanx

1 year, 7 months agoMaxNRG

Most Recent 1 year agoaxantroff

1 year, 1 month agopue_dev_anon

1 year, 1 month agortcpost

1 year, 2 months agoemmylou

1 year, 3 months agoaxantroff

1 year, 1 month agosuku2

1 year, 3 months agogudguy1a

1 year, 4 months agoodiez3

1 year, 5 months agoMathew106

1 year, 5 months agotheseawillclaim

1 year, 5 months agojkh_goh

1 year, 11 months agokelvintoys93

2 years, 1 month agoLestrang

1 year, 11 months agogcm7

2 years, 3 months agodevaid

2 years, 3 months agoKowalski

2 years, 3 months ago