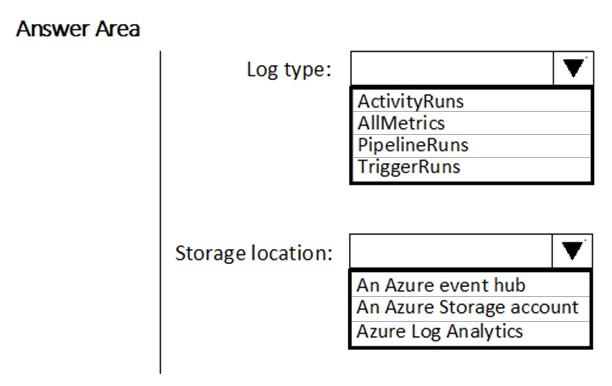

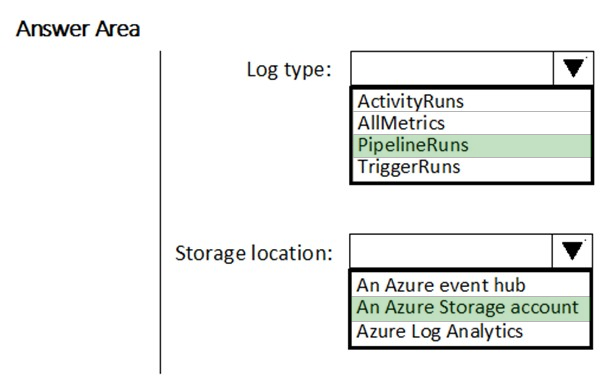

HOTSPOT

You have a new Azure Data Factory environment.

You need to periodically analyze pipeline executions from the last 60 days to identify trends in execution durations. The solution must use Azure Log Analytics to query the data and create charts.

Which diagnostic settings should you configure in Data Factory? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:
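For orientation, here is a minimal sketch of what a diagnostic setting that routes Data Factory logs to a Log Analytics workspace might look like as a request body. It assumes the general shape of an Azure Monitor diagnostic-settings payload (`workspaceId` plus a list of log categories) and the Data Factory log category name `PipelineRuns`. The helper name `build_diagnostic_setting` and the placeholder workspace ID are hypothetical and used only for illustration. This is not a definitive deployment script.

```python
import json


def build_diagnostic_setting(workspace_resource_id, categories=("PipelineRuns",)):
    """Build a dict shaped like an Azure Monitor diagnostic-settings body.

    Assumption: the payload nests a Log Analytics workspace ID and a list of
    enabled log categories under "properties". Check the field names against
    the current Azure Monitor documentation before using them.
    """
    return {
        "properties": {
            # Destination: the Log Analytics workspace that will be queried.
            "workspaceId": workspace_resource_id,
            # One entry per log category to forward.
            "logs": [{"category": c, "enabled": True} for c in categories],
        }
    }


# Hypothetical placeholder; replace with a real workspace resource ID.
WORKSPACE_ID = (
    "/subscriptions/<subscription-id>/resourceGroups/<resource-group>"
    "/providers/Microsoft.OperationalInsights/workspaces/<workspace-name>"
)

body = build_diagnostic_setting(WORKSPACE_ID)
print(json.dumps(body, indent=2))
```

The sketch only builds and prints the payload. Sending it to Azure and setting the workspace retention period to cover the 60-day window are left out.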
