HOTSPOT -

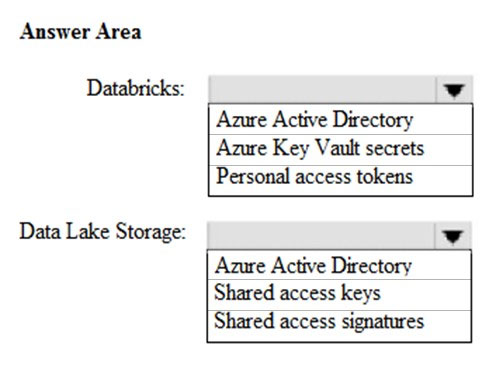

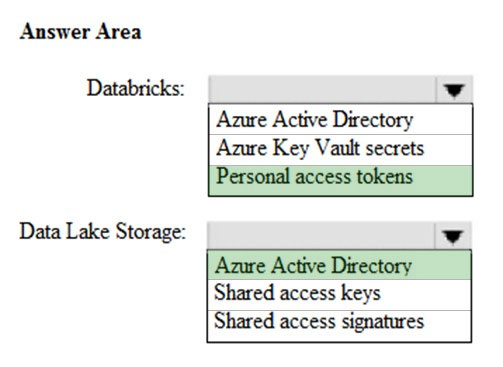

You use Azure Data Lake Storage Gen2 to store data that data scientists and data engineers will query by using Azure Databricks interactive notebooks. The folders in Data Lake Storage will be secured, and users will have access only to the folders that relate to the projects on which they work.

You need to recommend which authentication methods to use for Databricks and Data Lake Storage to provide the users with the appropriate access. The solution must minimize administrative effort and development effort.

Which authentication method should you recommend for each Azure service? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:
