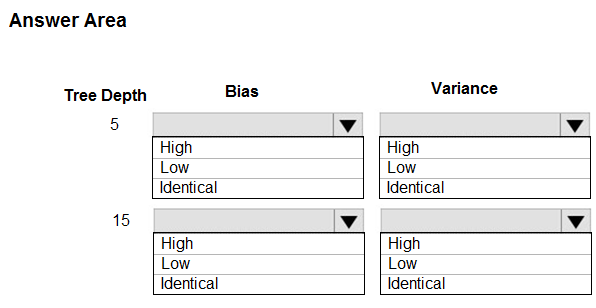

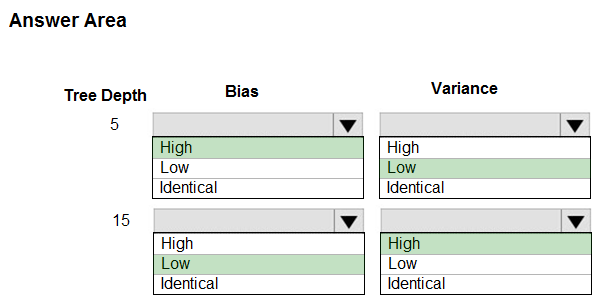

HOTSPOT

You are using a decision tree algorithm. You have trained a model that generalizes well at a tree depth equal to 10.

You need to select the bias and variance properties of the model with varying tree depth values.

Which properties should you select for each tree depth? To answer, select the appropriate options in the answer area.

Hot Area:

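As a general rule, a shallower decision tree has higher bias and lower variance because it tends to underfit. A deeper tree has lower bias and higher variance because it tends to overfit. The Python sketch illustrates that trend empirically. It is a minimal, self-contained simulation that relies on assumptions not found in the question: a hypothetical noisy sine target (`true_fn`), a hand-rolled 1-D regression tree (`fit_tree`, not a library implementation), 40 training points per trial, and 30 resampled training sets. The toy dataset is tiny, so its best depth is not 10. Only the direction of the trend carries over to the question.

```python
import math
import random
from statistics import mean, pvariance


def true_fn(x):
    # Hypothetical ground-truth signal for the simulation (not from the question).
    return math.sin(2 * math.pi * x)


def _sse(ys):
    # Sum of squared errors around the mean: the impurity a regression tree minimises.
    m = mean(ys)
    return sum((y - m) ** 2 for y in ys)


def fit_tree(points, depth):
    """Greedy 1-D regression tree (illustrative sketch, not a library API).

    points: list of (x, y) pairs; depth: maximum remaining depth.
    Returns ("leaf", value) or ("split", threshold, left, right).
    """
    leaf = ("leaf", mean(y for _, y in points))
    if depth == 0 or len(points) < 2:
        return leaf
    pts = sorted(points)
    best = None
    for i in range(1, len(pts)):
        if pts[i - 1][0] == pts[i][0]:
            continue  # cannot split between identical x values
        left, right = pts[:i], pts[i:]
        sse = _sse([y for _, y in left]) + _sse([y for _, y in right])
        if best is None or sse < best[0]:
            threshold = (pts[i - 1][0] + pts[i][0]) / 2
            best = (sse, threshold, left, right)
    if best is None:
        return leaf
    _, threshold, left, right = best
    return ("split", threshold, fit_tree(left, depth - 1), fit_tree(right, depth - 1))


def predict(tree, x):
    # Walk down the tree until a leaf is reached.
    while tree[0] == "split":
        _, threshold, left, right = tree
        tree = left if x <= threshold else right
    return tree[1]


def bias_variance(depth, n_train=40, n_trials=30, noise=0.3, seed=0):
    """Estimate squared bias and variance of a depth-limited tree by refitting
    it on many independently sampled training sets (all parameters assumed)."""
    rng = random.Random(seed)
    grid = [i / 50 for i in range(51)]
    preds = [[] for _ in grid]
    for _ in range(n_trials):
        xs = [rng.random() for _ in range(n_train)]
        data = [(x, true_fn(x) + rng.gauss(0, noise)) for x in xs]
        tree = fit_tree(data, depth)
        for j, x in enumerate(grid):
            preds[j].append(predict(tree, x))
    # Squared bias: average gap between the mean prediction and the true signal.
    bias_sq = mean((mean(p) - true_fn(x)) ** 2 for p, x in zip(preds, grid))
    # Variance: average spread of predictions across training sets.
    variance = mean(pvariance(p) for p in preds)
    return bias_sq, variance


if __name__ == "__main__":
    for d in (1, 2, 4, 10):
        b, v = bias_variance(d)
        print(f"depth={d:2d}  bias^2={b:.4f}  variance={v:.4f}")
```

When you run it, squared bias should broadly shrink and variance should broadly grow as `depth` increases. The exact numbers depend on the assumed seed, noise level, and sample size.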