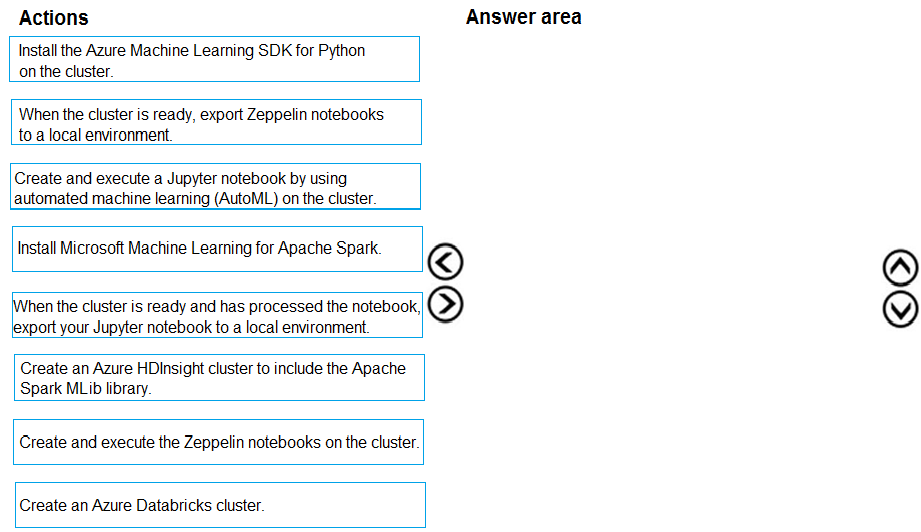

DRAG DROP -

You are building an intelligent solution using machine learning models.

The environment must support the following requirements:

✑ Data scientists must build notebooks in a cloud environment.

✑ Data scientists must use automatic feature engineering and model building in machine learning pipelines.

✑ Notebooks must be deployed to retrain using Spark instances with dynamic worker allocation.

✑ Notebooks must be exportable to be version controlled locally.

You need to create the environment.

Which four actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:
