HOTSPOT

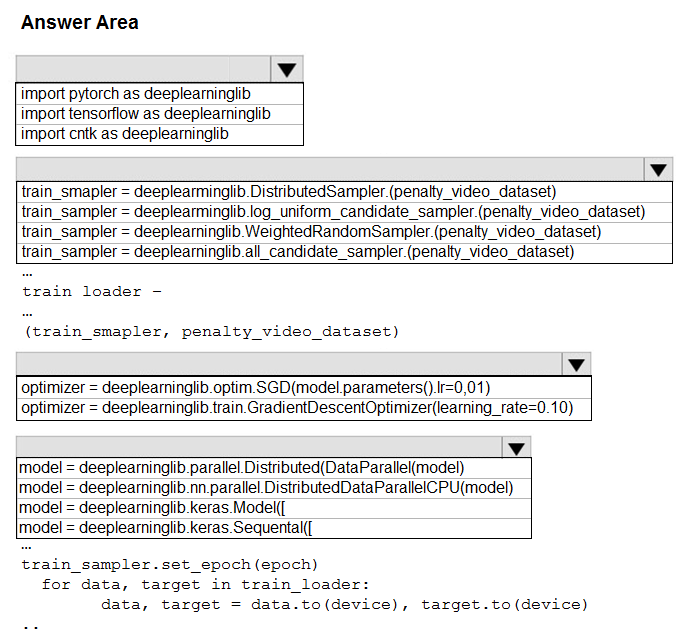

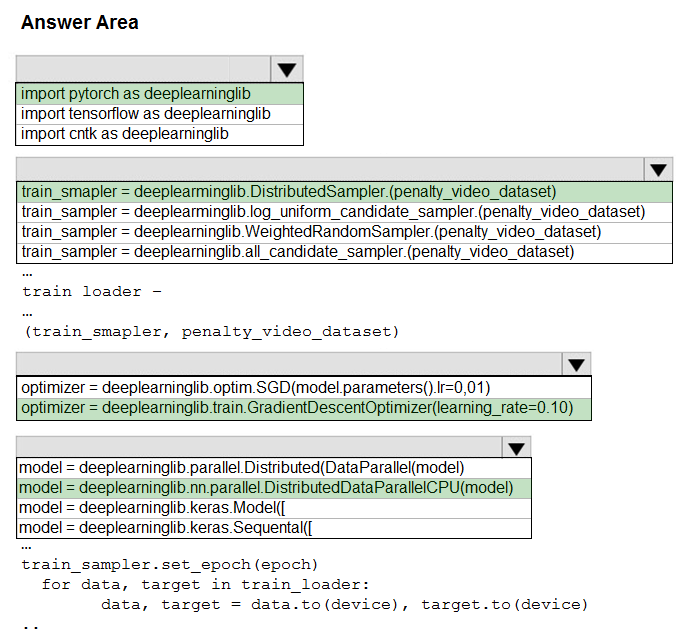

You need to use the Python language to build a sampling strategy for the global penalty detection models.

How should you complete the code segment? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:
