Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are using Azure Machine Learning to run an experiment that trains a classification model.

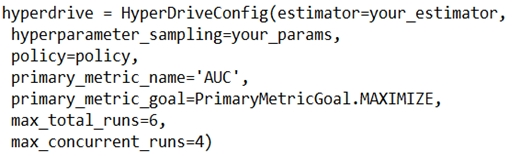

You want to use Hyperdrive to find parameters that optimize the AUC metric for the model. You configure a HyperDriveConfig for the experiment by running the following code:

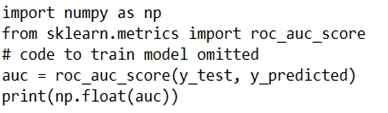

You plan to use this configuration to run a script that trains a random forest model and then tests it with validation data. The label values for the validation data are stored in a variable named y_test, and the predicted probabilities from the model are stored in a variable named y_predicted.

You need to add logging to the script to allow Hyperdrive to optimize hyperparameters for the AUC metric.

Solution: Run the following code:

Does the solution meet the goal?
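For reference, here is a minimal sketch of the kind of logging this scenario calls for. It makes several assumptions. The HyperDriveConfig is assumed to set `primary_metric_name` to `"AUC"`, because the configuration is only shown as an image, and the logged name must match it exactly. `y_predicted` is assumed to hold one positive-class probability per row. The script is assumed to use the Azure ML SDK v1 `Run.get_context()` / `run.log()` API. The helpers `roc_auc` and `log_auc` are hypothetical. A real training script would normally call `sklearn.metrics.roc_auc_score` rather than computing AUC by hand.

```python
# Sketch only: compute ROC AUC for binary labels and log it so Hyperdrive can
# read it. Assumes primary_metric_name="AUC" in the (image-only) HyperDriveConfig.

try:
    from azureml.core import Run  # Azure ML SDK v1
    run = Run.get_context()       # returns an offline run outside Azure ML
except ImportError:
    run = None                    # SDK not installed: compute AUC without logging


def roc_auc(y_true, y_score):
    """Hypothetical helper: ROC AUC as the fraction of (positive, negative)
    pairs where the positive scores higher; ties count as half."""
    pos = [s for t, s in zip(y_true, y_score) if t == 1]
    neg = [s for t, s in zip(y_true, y_score) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative label")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def log_auc(y_test, y_predicted):
    """Hypothetical helper: compute AUC and log it under the name "AUC"."""
    auc = roc_auc(y_test, y_predicted)
    if run is not None:
        run.log("AUC", float(auc))  # name must match primary_metric_name
    return auc


if __name__ == "__main__":
    y_test = [0, 0, 1, 1]
    y_predicted = [0.1, 0.4, 0.35, 0.8]
    print(log_auc(y_test, y_predicted))
```

The key point is the `run.log("AUC", ...)` call. Hyperdrive can only optimize a metric that the training script logs under the same name the HyperDriveConfig specifies. Computing the value alone is not enough.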
