HOTSPOT -

You design data engineering solutions for a company.

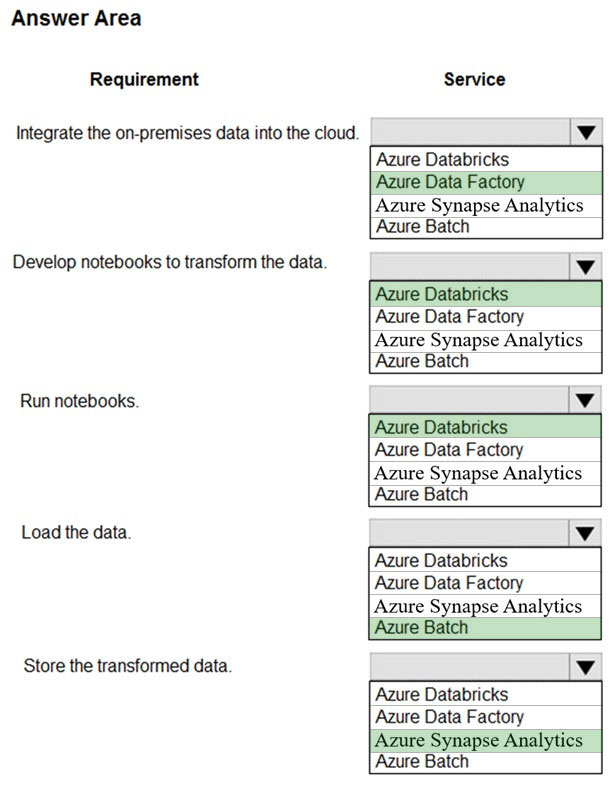

You must integrate on-premises SQL Server data into an Azure solution that performs Extract-Transform-Load (ETL) operations. The solution has the following requirements:

✑ Develop a pipeline that can integrate data and run notebooks.

✑ Develop notebooks to transform the data.

✑ Load the data into a massively parallel processing database for later analysis.

You need to recommend a solution.

What should you recommend? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:
