Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You create a model to forecast weather conditions based on historical data.

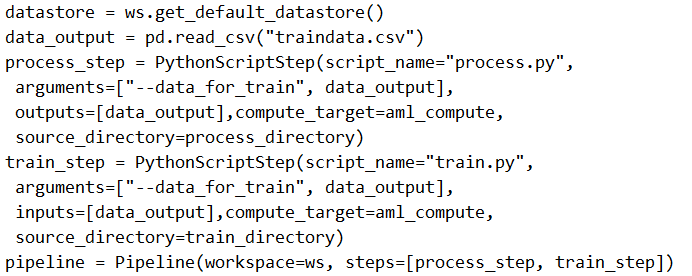

You need to create a pipeline that runs a processing script to load data from a datastore and pass the processed data to a machine learning model training script.

Solution: Run the following code:

Does the solution meet the goal?
