Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You create an Azure Machine Learning service datastore in a workspace. The datastore contains the following files:

✑ /data/2018/Q1.csv

✑ /data/2018/Q2.csv

✑ /data/2018/Q3.csv

✑ /data/2018/Q4.csv

✑ /data/2019/Q1.csv

All files store data in the following format:

id,f1,f2,I

1,1,2,0

2,1,1,1

3,2,1,0

4,2,2,1

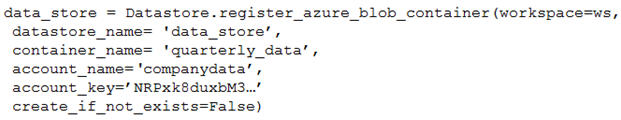

You run the following code:

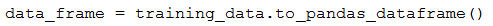

You need to create a dataset named training_data and load the data from all files into a single data frame by using the following code:

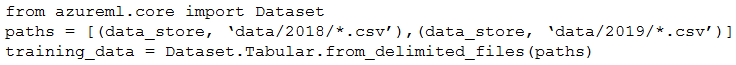

Solution: Run the following code:

Does the solution meet the goal?
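The goal stated above (every listed file loaded into one data frame) can be checked locally with a small stand-in. The sketch below is only an illustration under stated assumptions: it uses the Python standard library instead of the Azure Machine Learning SDK, it recreates the five files in a temporary directory instead of a datastore, and the helper names `build_sample_tree` and `load_all` are hypothetical. It does not reproduce the code in the missing images. It shows that a wildcard over the year folders picks up all five files, while a path limited to one year does not.

```python
import csv
import glob
import os
import tempfile

# Sample content and file layout copied from the scenario above.
HEADER = "id,f1,f2,I"
ROWS = ["1,1,2,0", "2,1,1,1", "3,2,1,0", "4,2,2,1"]
FILES = [
    "data/2018/Q1.csv",
    "data/2018/Q2.csv",
    "data/2018/Q3.csv",
    "data/2018/Q4.csv",
    "data/2019/Q1.csv",
]


def build_sample_tree(root):
    """Write the five scenario files under root (local stand-in for the datastore)."""
    for rel in FILES:
        path = os.path.join(root, *rel.split("/"))
        os.makedirs(os.path.dirname(path), exist_ok=True)
        with open(path, "w", newline="") as fh:
            fh.write("\n".join([HEADER] + ROWS) + "\n")


def load_all(root, pattern="data/*/*.csv"):
    """Return (matched_paths, rows) for every CSV file matching the wildcard pattern."""
    matched = sorted(glob.glob(os.path.join(root, *pattern.split("/"))))
    rows = []
    for path in matched:
        with open(path, newline="") as fh:
            # DictReader consumes each file's header, so only data rows are kept.
            rows.extend(csv.DictReader(fh))
    return matched, rows


with tempfile.TemporaryDirectory() as tmp:
    build_sample_tree(tmp)
    matched, rows = load_all(tmp)                      # wildcard over both year folders
    single_year, _ = load_all(tmp, "data/2018/*.csv")  # one year only

print(len(matched), len(rows), len(single_year))
```

A solution meets the goal only if its path expression covers both `/data/2018/` and `/data/2019/`. In this stand-in, the `data/*/*.csv` pattern matches all five files, whereas `data/2018/*.csv` leaves out `/data/2019/Q1.csv`.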
