
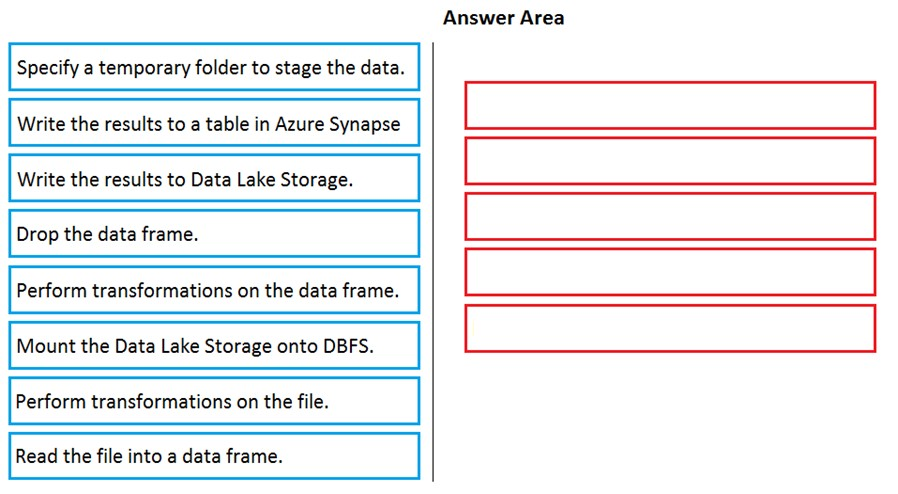

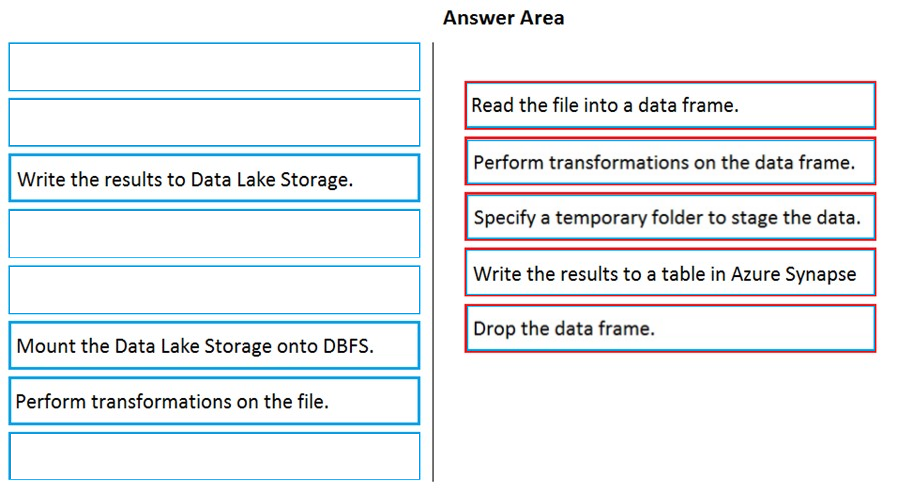

DRAG DROP -

You have an Azure Data Lake Storage Gen2 account that contains JSON files for customers. The files contain two attributes named FirstName and LastName.

You need to copy the data from the JSON files to an Azure Synapse Analytics table by using Azure Databricks. A new column must be created that concatenates the FirstName and LastName values.
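In a Databricks notebook the new column would normally be produced with a Spark DataFrame transformation, for example `concat_ws` from `pyspark.sql.functions`. The plain-Python sketch below only illustrates the row-level logic of that step. It assumes the files are JSON Lines (one object per line), a single space as separator, and a hypothetical output column named `FullName`; none of these are stated in the question.

```python
import json
from typing import Any, Dict, Iterable, List


def add_full_name(json_lines: Iterable[str], sep: str = " ") -> List[Dict[str, Any]]:
    """Parse JSON Lines records and add a concatenated FullName field.

    Mirrors Spark's concat_ws semantics: null or missing name parts are
    skipped instead of making the whole result null.
    """
    rows = []
    for line in json_lines:
        line = line.strip()
        if not line:
            continue  # ignore blank lines between records
        record = json.loads(line)
        parts = [record.get("FirstName"), record.get("LastName")]
        # "FullName" is a hypothetical column name; keep only non-null parts.
        record["FullName"] = sep.join(str(p) for p in parts if p is not None)
        rows.append(record)
    return rows


sample = [
    '{"FirstName": "Ada", "LastName": "Lovelace"}',
    '{"FirstName": "Grace", "LastName": null}',
]
for row in add_full_name(sample):
    print(row["FullName"])  # prints "Ada Lovelace", then "Grace"
```

On a real DataFrame the same idea is roughly `df.withColumn("FullName", concat_ws(" ", col("FirstName"), col("LastName")))`. Plain `concat` would instead return null whenever either name is null, which is why the sketch follows `concat_ws`.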

You create the following components:

✑ A destination table in Azure Synapse

✑ An Azure Blob storage container

✑ A service principal
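The service principal is what lets the notebook authenticate to the Data Lake Storage Gen2 account, and the Blob storage container serves as the staging area the Synapse connector uses (its `tempDir` option). The sketch below only builds the configuration values involved. The config keys follow the OAuth client-credentials pattern from the Azure Databricks documentation. The account, container, and directory names are hypothetical, and the `dbutils.fs.mount` call is left as a comment because `dbutils` exists only inside a Databricks notebook.

```python
from typing import Dict


def adls_oauth_configs(client_id: str, client_secret: str, tenant_id: str) -> Dict[str, str]:
    """Spark configs for a service principal (OAuth client credentials) on ADLS Gen2."""
    return {
        "fs.azure.account.auth.type": "OAuth",
        "fs.azure.account.oauth.provider.type":
            "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider",
        "fs.azure.account.oauth2.client.id": client_id,
        "fs.azure.account.oauth2.client.secret": client_secret,
        "fs.azure.account.oauth2.client.endpoint":
            f"https://login.microsoftonline.com/{tenant_id}/oauth2/token",
    }


def abfss_source(container: str, account: str) -> str:
    """ADLS Gen2 URI that would be passed to dbutils.fs.mount as `source`."""
    return f"abfss://{container}@{account}.dfs.core.windows.net/"


def staging_temp_dir(container: str, account: str, directory: str) -> str:
    """Blob storage URI for the Synapse connector's `tempDir` staging option."""
    return f"wasbs://{container}@{account}.blob.core.windows.net/{directory}"


# Hypothetical names. In a real notebook the secret would come from
# dbutils.secrets.get(scope=..., key=...) rather than a literal string.
configs = adls_oauth_configs("<application-id>", "<secret>", "<tenant-id>")
source = abfss_source("customers", "mydatalake")
temp_dir = staging_temp_dir("staging", "myblobstore", "tmp")
print(source)
print(temp_dir)

# Inside Databricks only (dbutils is not available outside a notebook):
# dbutils.fs.mount(source=source, mount_point="/mnt/customers", extra_configs=configs)
```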

Which five actions should you perform in sequence next in a Databricks notebook? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:
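The answer-area options appear only in the images, so the step names below are assumed paraphrases, not the exam's wording. The sketch encodes the data dependencies the scenario implies: storage must be reachable before the JSON is read, the concatenated column must exist before the write, and the staging folder must be set before data is written to Synapse. It then checks whether a proposed order respects those dependencies.

```python
from typing import Dict, List, Set

# Hypothetical step names paraphrasing the kind of actions the question describes.
PREREQUISITES: Dict[str, Set[str]] = {
    "mount_data_lake": set(),
    "read_json_into_dataframe": {"mount_data_lake"},
    "add_concatenated_column": {"read_json_into_dataframe"},
    "specify_staging_temp_dir": set(),
    "write_to_synapse": {"add_concatenated_column", "specify_staging_temp_dir"},
}


def respects_dependencies(order: List[str], prereqs: Dict[str, Set[str]]) -> bool:
    """True if every step appears exactly once and after all of its prerequisites."""
    if sorted(order) != sorted(prereqs):
        return False  # missing, extra, or duplicated steps
    position = {step: i for i, step in enumerate(order)}
    return all(position[dep] < position[step]
               for step, deps in prereqs.items() for dep in deps)


candidate = ["mount_data_lake", "read_json_into_dataframe", "add_concatenated_column",
             "specify_staging_temp_dir", "write_to_synapse"]
print(respects_dependencies(candidate, PREREQUISITES))  # True
```

Because the staging step only has to precede the write, these constraints accept more than one order. The sketch shows why the write must come last and the read must follow the mount; it does not decide which single order the exam key expects.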
