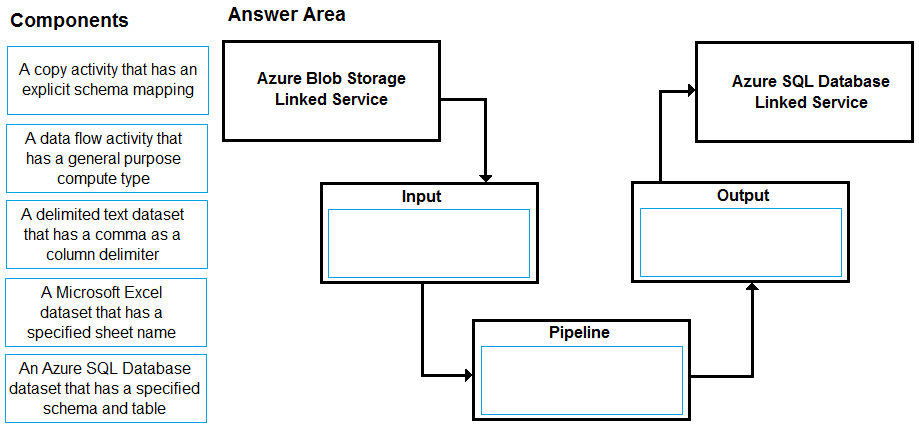

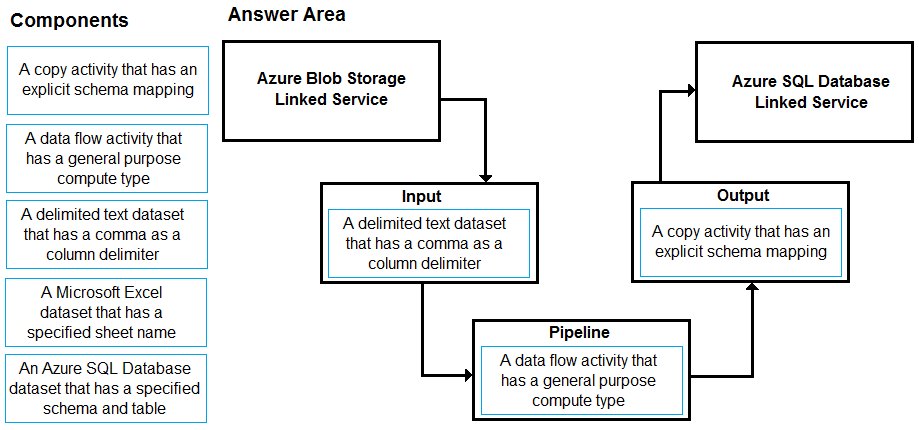

DRAG DROP -

You have a CSV file in Azure Blob storage. The file does NOT have a header row.

You need to use Azure Data Factory to copy the file to an Azure SQL database. The solution must minimize how long it takes to copy the file.

How should you configure the copy process? To answer, drag the appropriate components to the correct locations. Each component may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Select and Place:
