HOTSPOT -

You have an Azure event hub named retailhub that has 16 partitions. Transactions are posted to retailhub. Each transaction includes the transaction ID, the individual line items, and the payment details. The transaction ID is used as the partition key.
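The partition key determines which partition an event lands in, so every event sharing a transaction ID is routed to the same partition. Below is a minimal sketch of that routing, assuming a stand-in SHA-256-modulo hash. Event Hubs uses its own internal hashing, which this does not reproduce. The function name `assign_partition` and the sample transaction IDs and field names are hypothetical.

```python
import hashlib

PARTITION_COUNT = 16  # retailhub has 16 partitions


def assign_partition(partition_key: str, partition_count: int = PARTITION_COUNT) -> int:
    """Map a partition key to a partition index.

    Assumption: SHA-256 modulo the partition count is only a stand-in for
    illustration; it is NOT the hash Event Hubs actually uses.
    """
    digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


# Hypothetical transactions; the transaction ID is the partition key.
transactions = [
    {"transaction_id": "T-1001", "line_items": ["socks"], "payment": "card"},
    {"transaction_id": "T-1002", "line_items": ["hat"], "payment": "cash"},
    {"transaction_id": "T-1001", "line_items": ["shoes"], "payment": "card"},
]

for tx in transactions:
    # Events with the same transaction ID always map to the same partition.
    print(tx["transaction_id"], "-> partition", assign_partition(tx["transaction_id"]))
```

Because the mapping is deterministic, a consumer reading one partition sees every event for the transaction IDs that hash to it. This is what allows partitions to be processed independently of one another.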

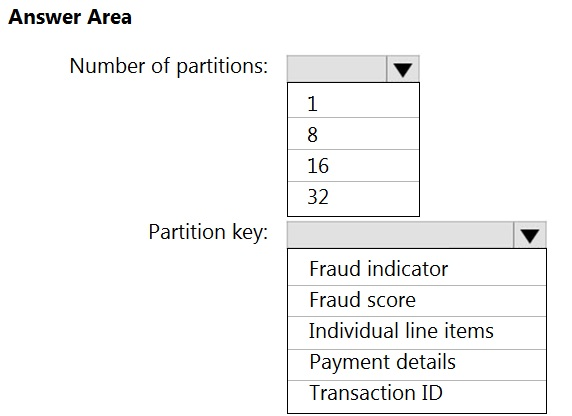

You are designing an Azure Stream Analytics job to identify potentially fraudulent transactions at a retail store. The job will use retailhub as the input. The job will output the transaction ID, the individual line items, the payment details, a fraud score, and a fraud indicator.

You plan to send the output to an Azure event hub named fraudhub.

You need to ensure that the fraud detection solution is highly scalable and processes transactions as quickly as possible.

How should you structure the output of the Stream Analytics job? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:
