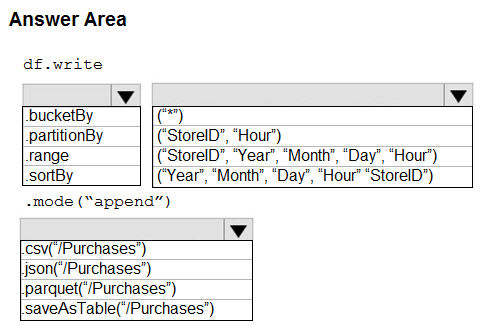

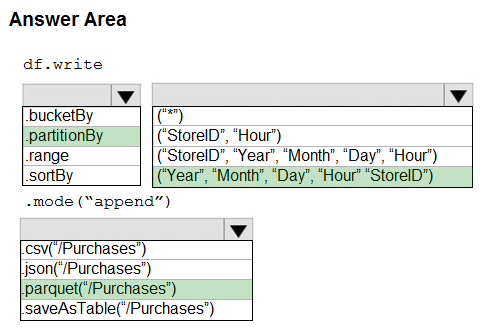

HOTSPOT -

You plan to develop a dataset named Purchases by using Azure Databricks. Purchases will contain the following columns:

✑ ProductID

✑ ItemPrice

✑ LineTotal

✑ Quantity

✑ StoreID

✑ Minute

✑ Month

✑ Hour

✑ Year

✑ Day

You need to store the data to support hourly incremental load pipelines that will vary for each StoreID. The solution must minimize storage costs.

How should you complete the code? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:
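One plausible way to meet the stated requirements, as a minimal sketch rather than the official answer: partition by StoreID and then by the time columns down to Hour (the load granularity), append rather than overwrite so each hourly run only adds data, and write Parquet because its compressed columnar layout keeps storage small. The output path `/Purchases` and the helper names `write_purchases` and `partition_path` are assumptions made for illustration; `df` is assumed to be a PySpark DataFrame holding the columns listed above.

```python
# Partition columns: StoreID first, then the time hierarchy down to Hour.
# Minute is left out because the pipelines load hourly, so a finer split
# would only create more, smaller files.
PARTITION_COLUMNS = ["StoreID", "Year", "Month", "Day", "Hour"]


def write_purchases(df, path="/Purchases"):
    """Append df to a Parquet dataset partitioned by store and hour.

    df is assumed to be a pyspark.sql.DataFrame with the Purchases columns;
    the default path is a hypothetical location.
    """
    (
        df.write
        .partitionBy(*PARTITION_COLUMNS)  # one directory level per column
        .mode("append")                   # incremental loads add, never overwrite
        .parquet(path)                    # compressed columnar storage
    )


def partition_path(row, root="/Purchases"):
    """Build the Hive-style directory for one row (illustration only)."""
    parts = [f"{col}={row[col]}" for col in PARTITION_COLUMNS]
    return "/".join([root] + parts)
```

For example, `partition_path({"StoreID": 7, "Year": 2024, "Month": 3, "Day": 9, "Hour": 14})` returns `/Purchases/StoreID=7/Year=2024/Month=3/Day=9/Hour=14`, which is the kind of directory an hourly pipeline for a single store would read or write on its own.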
