HOTSPOT -

A biomedical research company plans to enroll people in an experimental medical treatment trial.

You create and train a binary classification model to support selection and admission of patients to the trial. The model includes the following features: Age, Gender, and Ethnicity.

The model returns different performance metrics for people from different ethnic groups.

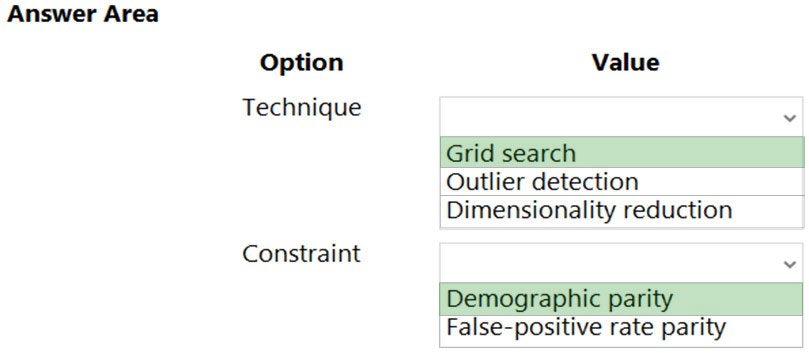

You need to use Fairlearn to mitigate and minimize disparities for each category in the Ethnicity feature.
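The sketch below shows what "disparities for each category" means in practice. It computes two per-group quantities that Fairlearn's parity constraints bound: the selection rate, which demographic parity compares across groups, and the true positive rate, one of the two rates that equalized odds compares. It uses only the standard library and hypothetical toy data, and it does not call Fairlearn itself. In Fairlearn, you would fit a reduction such as `ExponentiatedGradient` or `GridSearch` from `fairlearn.reductions` with a constraint object, passing the Ethnicity column as `sensitive_features`. The helper names here are illustrative assumptions.

```python
from collections import defaultdict


def group_rates(y_true, y_pred, groups):
    """Return {group: (selection_rate, true_positive_rate)} for 0/1 labels."""
    total = defaultdict(int)       # rows per group
    selected = defaultdict(int)    # predicted positives per group
    actual_pos = defaultdict(int)  # actual positives per group
    true_pos = defaultdict(int)    # correctly predicted positives per group
    for yt, yp, g in zip(y_true, y_pred, groups):
        total[g] += 1
        selected[g] += yp
        if yt == 1:
            actual_pos[g] += 1
            true_pos[g] += yp
    rates = {}
    for g in total:
        selection_rate = selected[g] / total[g]
        # TPR is undefined for a group with no actual positives.
        tpr = true_pos[g] / actual_pos[g] if actual_pos[g] else float("nan")
        rates[g] = (selection_rate, tpr)
    return rates


def max_gap(values):
    """Largest minus smallest defined value: the 'difference' form of a disparity."""
    defined = [v for v in values if v == v]  # drop NaN (NaN != NaN)
    return max(defined) - min(defined)


# Hypothetical toy data: 1 = admitted/eligible; "A", "B", "C" are placeholder groups.
y_true = [1, 0, 1, 1, 0, 1, 0, 1]
y_pred = [1, 0, 1, 0, 0, 1, 1, 1]
ethnicity = ["A", "A", "A", "B", "B", "B", "C", "C"]

rates = group_rates(y_true, y_pred, ethnicity)
selection_gap = max_gap([sel for sel, _ in rates.values()])
tpr_gap = max_gap([tpr for _, tpr in rates.values()])

for group, (sel, tpr) in sorted(rates.items()):
    print(f"{group}: selection_rate={sel:.3f}, tpr={tpr:.3f}")
print(f"selection-rate gap={selection_gap:.3f}, TPR gap={tpr_gap:.3f}")
```

A mitigation technique tries to shrink gaps like these for every Ethnicity category at once while keeping overall accuracy as high as possible. The constraint you choose determines which gap is bounded.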

Which technique and constraint should you use? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:
