HOTSPOT -

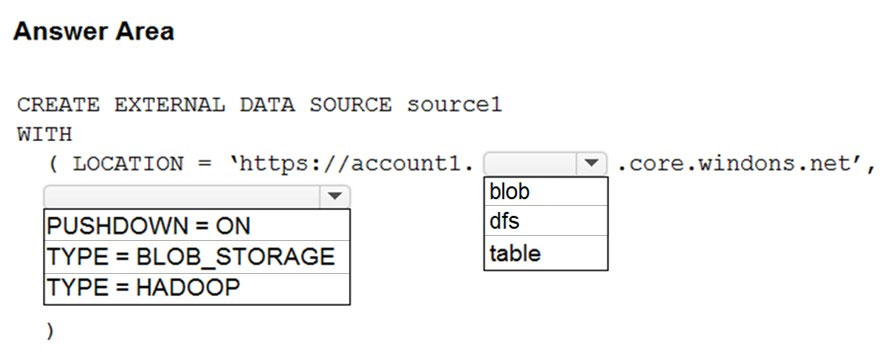

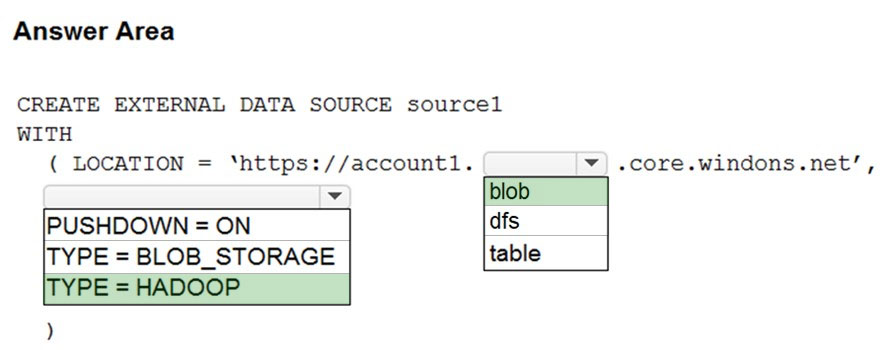

You have an Azure Synapse Analytics dedicated SQL pool named Pool1 and an Azure Data Lake Storage Gen2 account named Account1.

You plan to access the files in Account1 by using an external table.

You need to create a data source in Pool1 that you can reference when you create the external table.

How should you complete the Transact-SQL statement? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.
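
For orientation, here is a minimal sketch of the kind of statement the question is asking about. It assumes the usual pattern for a dedicated SQL pool reading from Data Lake Storage Gen2: an `abfss://` location on the `dfs.core.windows.net` endpoint and `TYPE = HADOOP`. The names `source1` and `container1` are hypothetical placeholders that do not appear in the question, and the storage account name is written in lowercase on the assumption that it follows Azure naming rules. Authentication is left out, so a database scoped credential may also be needed depending on how Account1 is secured.

```sql
-- Sketch only: source1 and container1 are hypothetical names.
-- Run in Pool1 (the dedicated SQL pool).
CREATE EXTERNAL DATA SOURCE source1
WITH (
    -- Data Lake Storage Gen2 is addressed through the dfs endpoint
    -- with the abfss scheme (TLS), not the blob endpoint.
    LOCATION = 'abfss://container1@account1.dfs.core.windows.net',
    -- Dedicated SQL pools use Hadoop-type external data sources
    -- for external tables over files in the lake.
    TYPE = HADOOP
);
```

An external table could then reference this data source by name in its `DATA_SOURCE` option, together with an external file format.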

Hot Area:
