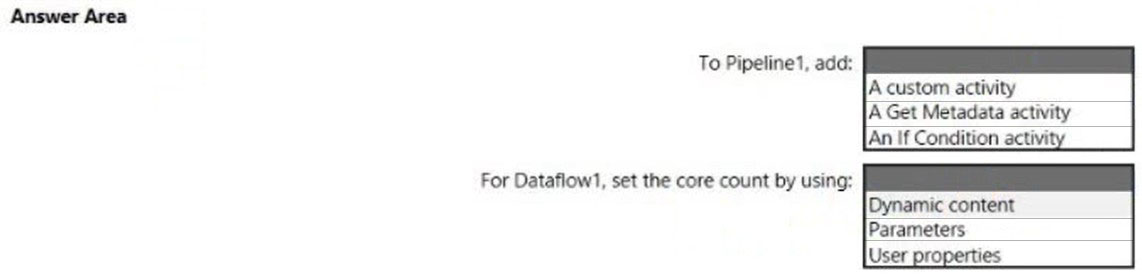

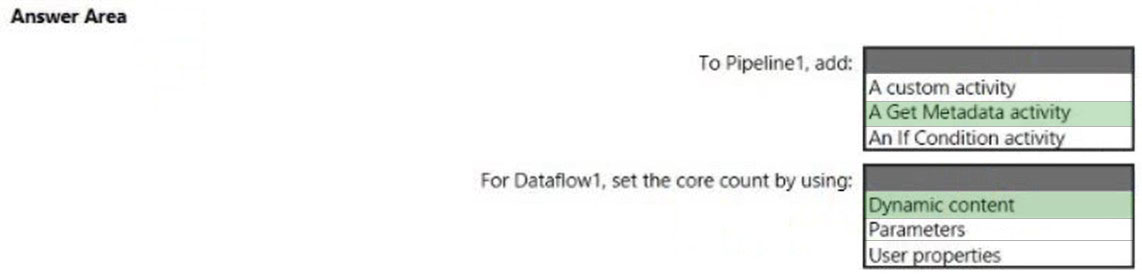

HOTSPOT -

You have an Azure Synapse Analytics pipeline named Pipeline1 that contains a data flow activity named Dataflow1.

Pipeline1 retrieves files from an Azure Data Lake Storage Gen2 account named storage1.

Dataflow1 uses the AutoResolveIntegrationRuntime integration runtime configured with a core count of 128.

You need to optimize the number of cores used by Dataflow1 to accommodate the size of the files in storage1.

What should you configure? To answer, select the appropriate options in the answer area.

Hot Area:
